Safe Learning-Enabled System for Autonomous Vehicles

PI: Dr. Xi Peng (Machine Learning)

Co-PI: Dr. Weisong Shi (Autonomous Vehicle)

Co-PI: Dr. Chengmo Yang (Hardware)

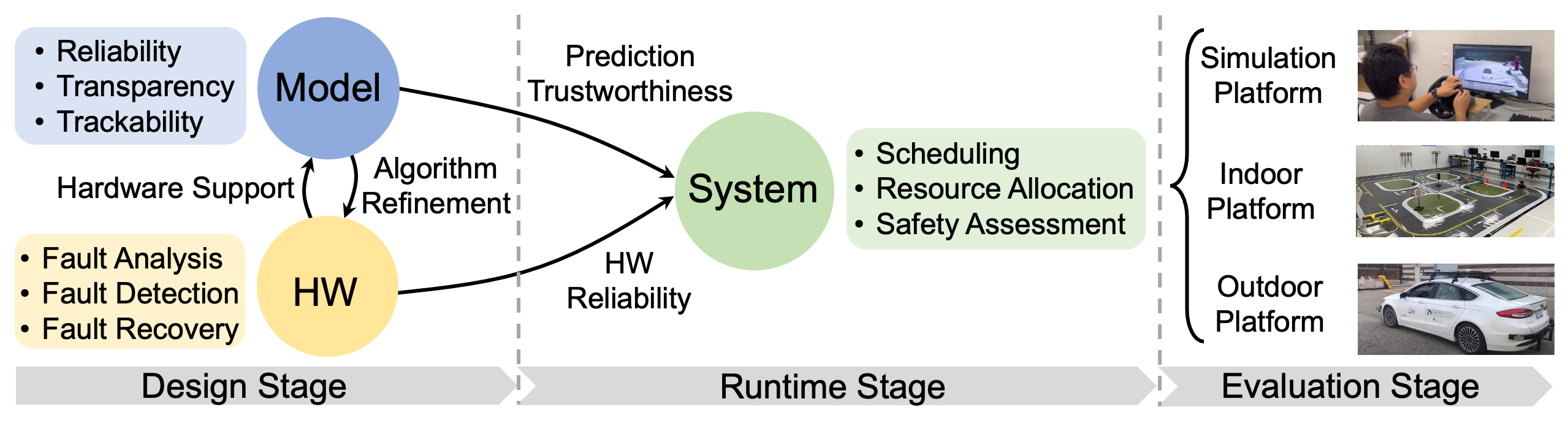

The proposed OSLA (Orchestrated Safe Learning for Autonomous driving) system.

Abstract: Machine Learning (ML) has transformed autonomous driving by enabling vehicles to perceive their environment with high precision, make real-time decisions, and operate without human intervention. However, safety risks can arise at three layers: the model, which may extrapolate inappropriately in unusual scenarios; the hardware, which suffers from faults and errors; and the system, where the real-time operating system (RTOS) may not deliver decisions in time. Developing a safe learning-enabled system for autonomous vehicles (AVs) therefore requires orchestrating the model, hardware, and system. This project focuses on cross-layer optimizations to achieve end-to-end safety by developing ML models whose predictions rest on valid rationales, integrating hardware reliability into ML design to tolerate runtime faults, and designing an RTOS scheduler that ensures time predictability while accounting for model and hardware reliability. Implementing these advancements on real autonomous driving platforms will enhance AV safety, promote efficient transportation, and advance education and workforce development in AI and autonomous driving with a commitment to diversity and inclusion in STEM fields.

Publications:

- [ICML'25] Mengmeng Ma, Tang Li, Yunxiang Peng, Lu Lin, Volkan Beylergil, Binsheng Zhao, Oguz Akin, Xi Peng. “Why Is There a Tumor?”: Tell Me the Reason, Show Me the Evidence. In International Conference on Machine Learning, 2025. [PDF] [Code]

- [AAAI'25 Oral] Kien X. Nguyen, Tang Li, Xi Peng. Interpretable Failure Detection with Human-Level Concepts. In AAAI Conference on Artificial Intelligence, 2025. [PDF] [Code]

- [NeurIPS'24] Tang Li, Mengmeng Ma, Xi Peng. Beyond Accuracy: Ensuring Correct Predictions with Correct Rationales. In Advances in Neural Information Processing Systems, 2024. [PDF] [Code]

- [ECCV'24 Strong Double Blind] Tang Li, Mengmeng Ma, Xi Peng. DEAL: Disentangle and Localize Concept-level Explanations for VLM. In European Conference on Computer Vision, 2024. [PDF] [Code]

- [ITSC'25] Boyang Tian and Weisong Shi. Context-aware Risk Assessment and Its Application in Autonomous Driving. In IEEE International Conference on Intelligent Transportation Systems, 2025. [PDF]

Open-Sourced Data:

- Prediction Rationale Dataset for ImageNet: We construct a new rationale dataset that covers all 1,000 ImageNet categories. For each category, we generate an ontology tree with a maximum height of two. Combining attributes and sub-attributes, the dataset contains over 4,000 unique rationales. [https://github.com/deep-real/DCP/tree/main/Rationale%20Dataset]
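
To make the dataset structure concrete, the following is a minimal sketch of how a height-two ontology tree could be represented and flattened into rationales. The nested-dictionary layout, the function names `flatten_rationales` and `count_unique`, and the example entries are all assumptions for illustration; the released files may use a different schema.

```python
from typing import Dict, List

# Hypothetical layout (an assumption, not the released format):
# one tree per category, mapping each attribute to its sub-attributes.
# Two levels below the category matches the stated maximum height of two.
OntologyTree = Dict[str, List[str]]


def flatten_rationales(tree: OntologyTree) -> List[str]:
    """List every attribute and attribute/sub-attribute pair in a tree."""
    rationales: List[str] = []
    for attribute, sub_attributes in tree.items():
        rationales.append(attribute)
        for sub in sub_attributes:
            rationales.append(f"{attribute}: {sub}")
    return rationales


def count_unique(dataset: Dict[str, OntologyTree]) -> int:
    """Count distinct rationales across all categories."""
    unique = set()
    for tree in dataset.values():
        unique.update(flatten_rationales(tree))
    return len(unique)


# Illustrative entries only; these are not taken from the dataset.
example: Dict[str, OntologyTree] = {
    "tabby cat": {"fur": ["striped pattern"], "whiskers": []},
    "tiger": {"fur": ["striped pattern", "orange color"], "whiskers": []},
}

print(flatten_rationales(example["tabby cat"]))
print(count_unique(example))
```

Rationales shared between categories are counted once in this sketch, which is one plausible reading of "unique rationales" in the description above.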

Open-Sourced Software:

- Distributionally Robust Explanations (DRE): A framework to enhance machine learning (ML) model robustness against out-of-distribution data. Source code and pretrained models at [https://github.com/deep-real/DRE].

- Rationale-informed Optimization: A toolbox for training ML models to ensure dual-correct predictions. Source code and pretrained weights at [https://github.com/deep-real/DCP].

- Ordinal Ranking of Concept Activation (ORCA): A lightweight, interpretable failure detection toolkit based on concept activation rankings. Codebase at [https://github.com/Nyquixt/ORCA].